GSoC 2022 - Final Work Report


In this gist, I present my work during the Google Summer of Code (GSoC) 2022 program. As part of the project, I contributed code to the published book Probabilistic Machine Learning: An Introduction (book1) and the upcoming book Probabilistic Machine Learning: Advanced Topics (book2) by Kevin P. Murphy.

I mainly worked on two repositories: i) pyprobml (public), a repository containing most of the source code for the books; and ii) bookv2 (private), a repository containing the source of the book itself.

Highlights

- I worked on this project for 16 weeks, for an average of 42 hours a week.

- I refactored the pyprobml repository to shift from .py files to .ipynb files containing code to reproduce figures.

- I added automatic code testing via GitHub workflows to pyprobml.

- I wrote code for several figures in the “Gaussian processes” chapter.

- I developed a Bayesian inference library in JAX called BIJAX.

- I co-authored a section of book2 (34.7 - Active learning) with Dr. Kevin P. Murphy.

- I learned advanced features of the JAX library.

.py to .ipynb conversion

Background

In the earlier version of the repository, the code for each figure was stored in a .py file, and there was one Jupyter notebook per chapter containing cells that executed each of those .py files. Links to those cells were stored in a private Firestore database, and a short link was given with each figure in book1.

There were several challenges with this setup:

- It was difficult to maintain and keep up to date because manual intervention was needed in multiple places.

- Because each .py file was executed in full, there was minimal interactivity with the code.

Solution

After brainstorming, we decided to move from a .py file per figure to a self-contained .ipynb file per figure, which removed the need for a notebook per chapter. This also enabled readers to play with the notebooks interactively or to view the cached figures in them. In the final version, the notebooks are stored in folders named after chapter numbers and are integrated with the book without the need for the Firestore database.
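The conversion itself is mechanical. Below is a minimal sketch, assuming the scripts mark cell boundaries with the `# %%` convention (understood by VS Code, Spyder and Jupytext) and writing the nbformat-4 JSON by hand with only the standard library. `split_cells`, `py_to_ipynb` and `convert` are hypothetical helpers, not the tooling actually used in pyprobml.

```python
import json
from pathlib import Path


def split_cells(py_source: str) -> list[list[str]]:
    """Split a script into cells at '# %%' marker lines (the marker line itself is dropped)."""
    cells, current = [], []
    for line in py_source.splitlines(keepends=True):
        if line.lstrip().startswith("# %%"):
            cells.append(current)
            current = []
        else:
            current.append(line)
    cells.append(current)
    return [c for c in cells if "".join(c).strip()]  # drop empty cells


def py_to_ipynb(py_source: str) -> dict:
    """Build a minimal nbformat-4 notebook with one code cell per script block."""
    cells = [
        {"cell_type": "code", "execution_count": None, "metadata": {}, "outputs": [], "source": lines}
        for lines in split_cells(py_source)
    ]
    return {
        "cells": cells,
        "metadata": {"kernelspec": {"name": "python3", "display_name": "Python 3", "language": "python"}},
        "nbformat": 4,
        "nbformat_minor": 4,  # minor version 4 does not require per-cell "id" fields
    }


def convert(py_path: Path, chapter_dir: Path) -> Path:
    """Write <chapter_dir>/<script stem>.ipynb (e.g. chapter_dir = notebooks/book1/18, hypothetical layout)."""
    chapter_dir.mkdir(parents=True, exist_ok=True)
    out_path = chapter_dir / f"{py_path.stem}.ipynb"
    out_path.write_text(json.dumps(py_to_ipynb(py_path.read_text()), indent=1))
    return out_path
```

Writing the JSON directly keeps the sketch dependency-free; in practice the nbformat or Jupytext packages also validate the result and handle edge cases such as markdown cells.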

Automatic code testing

Background

Code in any repository needs to stay up to date with the packages it depends on. pyprobml depends on many packages, so an automatic GitHub workflow that tests the code on every push is beneficial.

Solution

I implemented the majority of the workflow, which: i) runs and checks all notebooks on every push; ii) statically checks whether imported modules are installed, or will be installed by the notebook itself; iii) checks code quality with black; and iv) pushes useful statistics to other branches, e.g. auto_generated_figure. The following PRs made the major changes:

| PR | Title |
|---|---|
| PR 703 | Add workflow and jupyter-book setup |
| PR 764 | Split the workflow. Enable static module checker. |
| PR 795 | Combine and publish results from parallel workflows |
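Step ii) is the least obvious of these checks. The following is a minimal sketch of how such a static checker could work, not the workflow's actual code: it parses a notebook's code cells with `ast`, treats `%pip install` / `!pip install` lines as declared dependencies, and asks `importlib.util.find_spec` whether every other imported top-level module is importable. The helper names are hypothetical, and matching pip package names to module names is a simplification (for example, `scikit-learn` installs `sklearn`).

```python
import ast
import importlib.util
import re


def notebook_code(nb: dict) -> tuple[str, set[str]]:
    """Join a notebook's code cells, neutralising IPython magic/shell lines.

    Lines such as '%pip install x' or '!pip install x' are not valid Python, so each one
    is replaced by 'pass' at the same indentation (keeping try/except blocks parseable);
    the package names they install are collected separately.
    """
    lines, pip_targets = [], set()
    for cell in nb["cells"]:
        if cell["cell_type"] != "code":
            continue
        source = cell["source"]
        source = "".join(source) if isinstance(source, list) else source
        for line in source.splitlines():
            stripped = line.strip()
            if stripped.startswith(("%", "!")):
                tokens = stripped.lstrip("%!").split()
                if tokens[:2] == ["pip", "install"]:
                    # Keep bare package names: skip flags, drop version specifiers/extras.
                    pip_targets.update(re.split(r"[=<>\[]", t)[0] for t in tokens[2:] if not t.startswith("-"))
                indent = line[: len(line) - len(line.lstrip())]
                lines.append(indent + "pass")
            else:
                lines.append(line)
    return "\n".join(lines), pip_targets


def imported_top_level_modules(code: str) -> set[str]:
    """Top-level names of all absolute imports in `code`."""
    names = set()
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def missing_modules(nb: dict) -> set[str]:
    """Modules the notebook imports that are neither importable here nor pip-installed by it."""
    code, pip_targets = notebook_code(nb)
    return {
        name
        for name in imported_top_level_modules(code)
        if name not in pip_targets and importlib.util.find_spec(name) is None
    }
```

A workflow step could load each notebook with `json.load` and fail the run whenever `missing_modules` returns a non-empty set.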

Figures for Gaussian processes chapter

Gaussian processes are related to my thesis topic and thus I wrote code and/or latexified several figures in the Gaussian processes chapter. Here is the list of figures generated by my code:

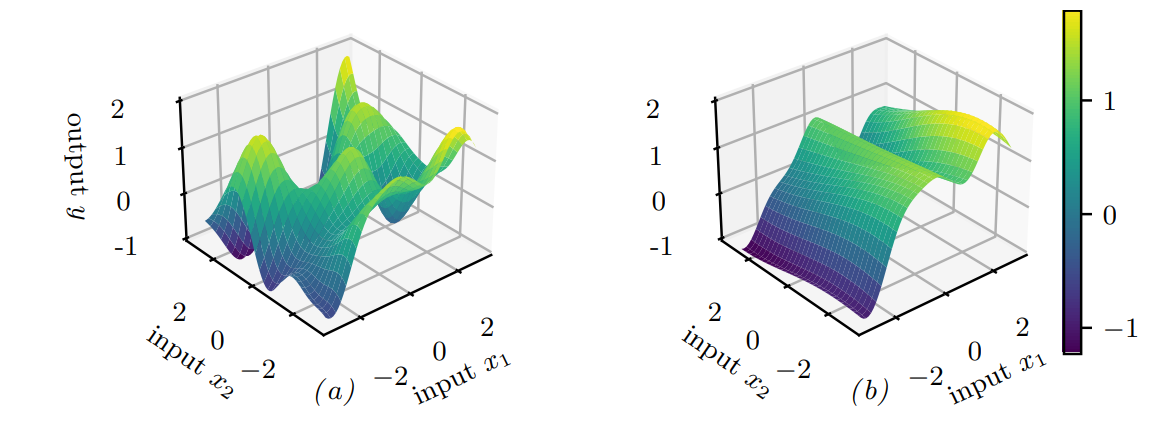

- Figure 18.2 [PR 727] explains automatic relevance determination (ARD) in Gaussian processes: (a) the same lengthscale for both dimensions; (b) the lengthscale in one dimension dominates the other.
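To make the ARD idea concrete, here is a minimal JAX sketch, assuming a squared-exponential (RBF) base kernel; `ard_rbf_kernel` and `sample_gp_prior` are illustrative names, not the PR 727 notebook code. Each input dimension d gets its own lengthscale l_d. A very long lengthscale makes the function nearly constant along that dimension, so variation along the other dimension dominates.

```python
import jax
import jax.numpy as jnp


def ard_rbf_kernel(x1, x2, lengthscales, variance=1.0):
    """k(x, x') = variance * exp(-0.5 * sum_d ((x_d - x'_d) / l_d)^2).

    x1: (n, D), x2: (m, D), lengthscales: (D,) -> (n, m) Gram matrix.
    """
    z1 = x1 / lengthscales  # rescale each dimension by its own lengthscale
    z2 = x2 / lengthscales
    sq_dists = jnp.sum((z1[:, None, :] - z2[None, :, :]) ** 2, axis=-1)
    return variance * jnp.exp(-0.5 * sq_dists)


def sample_gp_prior(key, x, lengthscales, jitter=1e-5):
    """Draw one function sample f ~ N(0, K) at inputs x via a Cholesky factor."""
    K = ard_rbf_kernel(x, x, lengthscales) + jitter * jnp.eye(x.shape[0])
    L = jnp.linalg.cholesky(K)
    return L @ jax.random.normal(key, (x.shape[0],))
```

Evaluating `sample_gp_prior` on a 2-D grid with equal lengthscales versus one much longer lengthscale reproduces the qualitative contrast between the two panels; dense grids may need a larger `jitter` in float32.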

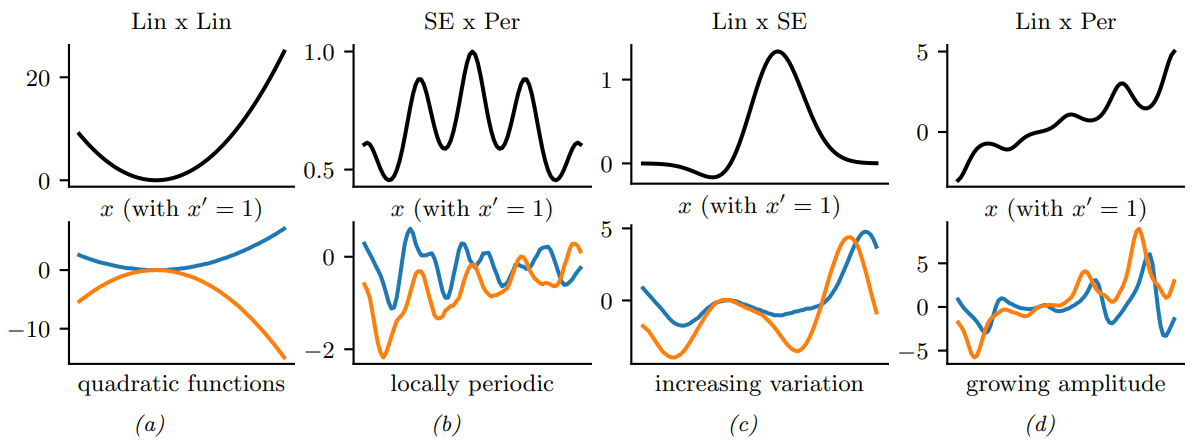

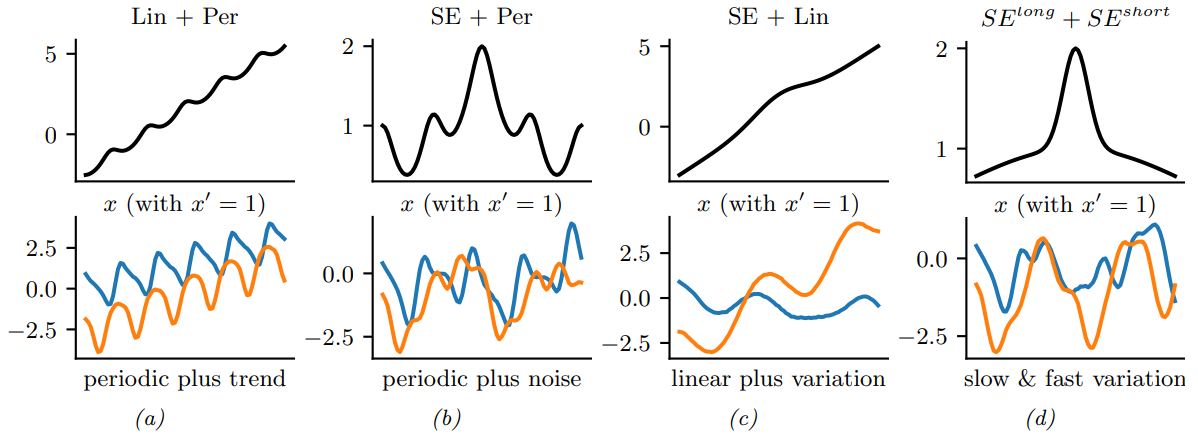

- Figures 18.5 and 18.6 [PR 721, PR 722] illustrate combining GP kernels via multiplication and summation.
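Sums and products of valid (positive semidefinite) kernels are again valid kernels, which is what makes these combinations possible. Below is a minimal JAX sketch for 1-D inputs; the base kernels and the `add`/`mul` combinators are illustrative and are not taken from PR 721 or PR 722.

```python
import jax.numpy as jnp


def rbf(x1, x2, lengthscale=1.0):
    """Squared-exponential kernel for 1-D inputs x1: (n,), x2: (m,)."""
    return jnp.exp(-0.5 * (x1[:, None] - x2[None, :]) ** 2 / lengthscale**2)


def linear(x1, x2, c=0.0):
    """Linear kernel (x - c)(x' - c)."""
    return (x1[:, None] - c) * (x2[None, :] - c)


def periodic(x1, x2, period=1.0, lengthscale=1.0):
    """Periodic kernel exp(-2 sin^2(pi |x - x'| / period) / lengthscale^2)."""
    d = jnp.abs(x1[:, None] - x2[None, :])
    return jnp.exp(-2.0 * jnp.sin(jnp.pi * d / period) ** 2 / lengthscale**2)


def add(k1, k2):
    """Sum of two kernels: models a function as a sum of independent components."""
    return lambda x1, x2: k1(x1, x2) + k2(x1, x2)


def mul(k1, k2):
    """Product of two kernels (PSD by the Schur product theorem)."""
    return lambda x1, x2: k1(x1, x2) * k2(x1, x2)
```

For example, `mul(rbf, periodic)` gives a "locally periodic" kernel whose periodic pattern can drift over longer distances, while `add(linear, periodic)` describes a periodic signal on top of a linear trend.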

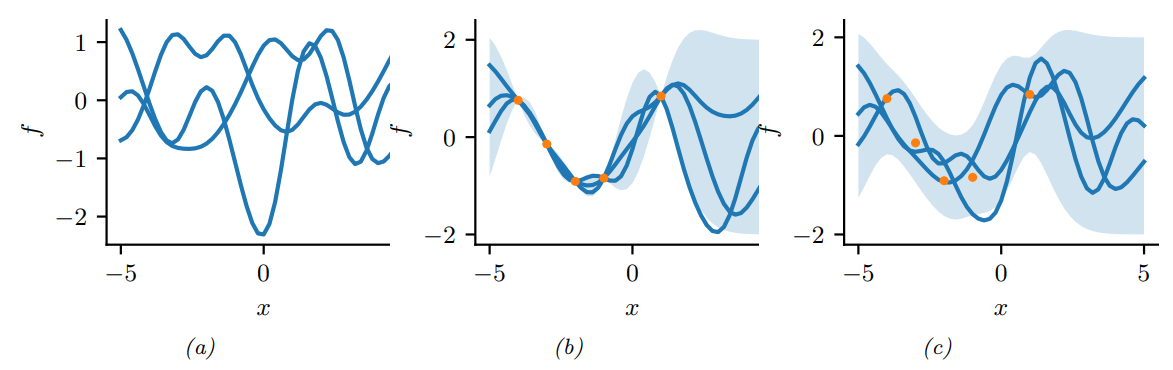

- Figure 18.7 [PR 728, PR 750] illustrates noiseless and noisy Gaussian process regression: (a) samples from the prior; (b) noiseless regression; (c) noisy regression.
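The only difference between the noiseless and noisy cases is the observation-noise variance added to the diagonal of the training Gram matrix. Below is a minimal JAX sketch of the standard Cholesky-based GP posterior, assuming a unit-variance, unit-lengthscale RBF kernel; `gp_posterior` is a hypothetical helper, not the notebook's code.

```python
import jax.numpy as jnp
import jax.scipy.linalg as jsl


def rbf(x1, x2, lengthscale=1.0, variance=1.0):
    return variance * jnp.exp(-0.5 * (x1[:, None] - x2[None, :]) ** 2 / lengthscale**2)


def gp_posterior(x_train, y_train, x_test, noise_var, jitter=1e-6):
    """Posterior mean and covariance of f(x_test) given y = f(x_train) + N(0, noise_var)."""
    K = rbf(x_train, x_train) + (noise_var + jitter) * jnp.eye(x_train.shape[0])
    K_s = rbf(x_train, x_test)
    K_ss = rbf(x_test, x_test)
    L = jnp.linalg.cholesky(K)
    alpha = jsl.cho_solve((L, True), y_train)        # K^{-1} y
    mean = K_s.T @ alpha
    v = jsl.solve_triangular(L, K_s, lower=True)     # L^{-1} K_s
    cov = K_ss - v.T @ v                             # K_ss - K_s^T K^{-1} K_s
    return mean, cov
```

With `noise_var=0.0` the posterior mean interpolates the training targets and the posterior variance collapses there; with a positive `noise_var` the mean is smoothed and some variance remains at the training inputs.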

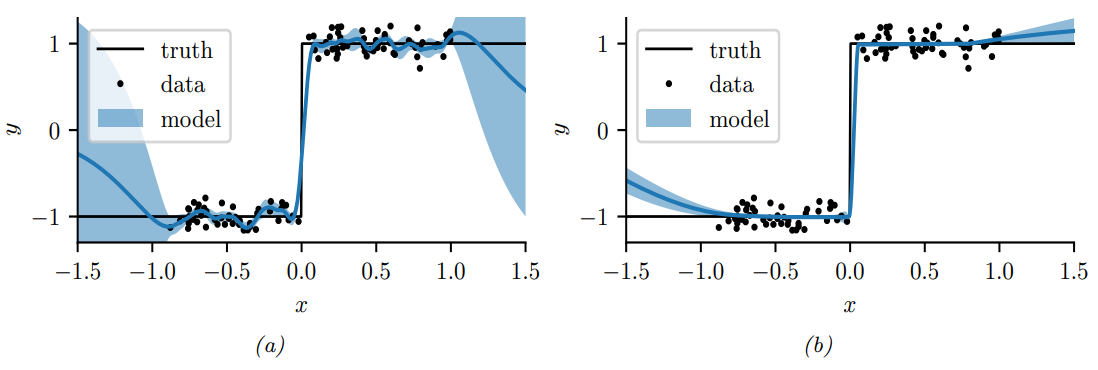

- Figure 18.18 [PR 919] illustrates GP regression with (a) maximum likelihood and (b) fully Bayesian inference. I added a blackjax version of the code for this.
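In the maximum-likelihood variant, the kernel hyperparameters are point estimates found by minimizing the negative log marginal likelihood, 0.5 yᵀK⁻¹y + 0.5 log|K| + (n/2) log 2π. The fully Bayesian variant instead places priors on them and samples their posterior with MCMC (blackjax provides samplers such as NUTS). Below is a minimal sketch of the maximum-likelihood part only, assuming an RBF kernel, hyperparameters optimized in log space for positivity, and plain gradient descent in place of a library optimizer. It is not the PR 919 code, and `fit_type2_ml` is a hypothetical name.

```python
import jax
import jax.numpy as jnp
import jax.scipy.linalg as jsl


def neg_log_marginal_likelihood(log_params, x, y):
    """-log p(y | x, theta) for an RBF kernel; theta = exp(log_params) keeps it positive."""
    p = jnp.exp(log_params)
    lengthscale, variance, noise_var = p[0], p[1], p[2]
    n = x.shape[0]
    K = variance * jnp.exp(-0.5 * (x[:, None] - x[None, :]) ** 2 / lengthscale**2)
    K = K + (noise_var + 1e-6) * jnp.eye(n)
    L = jnp.linalg.cholesky(K)
    alpha = jsl.cho_solve((L, True), y)
    # 0.5 * log|K| equals the sum of log-diagonal entries of the Cholesky factor.
    return 0.5 * y @ alpha + jnp.sum(jnp.log(jnp.diag(L))) + 0.5 * n * jnp.log(2 * jnp.pi)


grad_nlml = jax.jit(jax.grad(neg_log_marginal_likelihood))


def fit_type2_ml(x, y, steps=500, lr=0.01):
    """Plain gradient descent on the log hyperparameters; returns (lengthscale, variance, noise_var)."""
    log_params = jnp.zeros(3)  # all hyperparameters start at 1
    for _ in range(steps):
        log_params = log_params - lr * grad_nlml(log_params, x, y)
    return jnp.exp(log_params)
```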

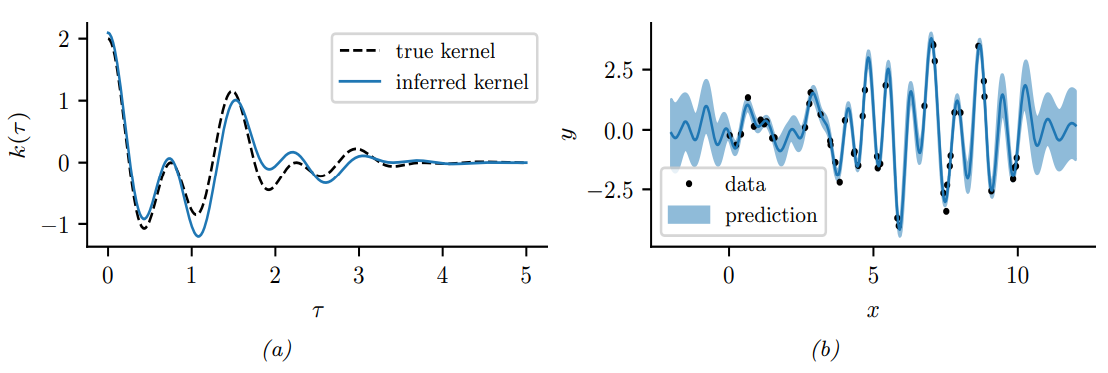

- Figure 18.23 [PR 874] illustrates a GP fit with a spectral mixture kernel. I latexified this figure.
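For reference, the 1-D spectral mixture kernel models the kernel's spectral density as a mixture of Q Gaussians with weights w_q, mean frequencies μ_q and variances v_q, which gives k(τ) = Σ_q w_q exp(-2π²τ²v_q) cos(2πτμ_q) for the lag τ = x - x'. Below is a minimal JAX sketch of this formula; the argument names are illustrative, and the figure itself was produced by the existing notebook rather than by this code.

```python
import jax.numpy as jnp


def spectral_mixture_kernel(x1, x2, weights, means, variances):
    """1-D spectral mixture kernel; x1: (n,), x2: (m,), weights/means/variances: (Q,)."""
    tau = (x1[:, None] - x2[None, :])[..., None]  # (n, m, 1) lags, broadcast over Q components
    components = (
        weights
        * jnp.exp(-2.0 * jnp.pi**2 * tau**2 * variances)
        * jnp.cos(2.0 * jnp.pi * tau * means)
    )
    return jnp.sum(components, axis=-1)  # (n, m)
```

A single component with μ = 0 reduces to an RBF kernel with lengthscale 1 / (2π√v), while components with μ > 0 capture periodic structure with period 1/μ.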

- Figure 18.26 [PR 871] illustrates deep kernel learning with Gaussian processes: (a) fit with a Matern kernel; (b) fit with an MLP + Matern kernel. I latexified this figure.
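In deep kernel learning, a base kernel is applied to features produced by a neural network, k(x, x') = k_base(g(x), g(x')), and the network weights are learned jointly with the kernel hyperparameters by maximizing the marginal likelihood. Below is a minimal JAX sketch of the kernel only, assuming a tanh MLP and a Matérn-3/2 base kernel. The figure's Matern order and network architecture may differ, and all names are illustrative.

```python
import jax
import jax.numpy as jnp


def init_mlp(key, sizes):
    """List of (W, b) pairs for layer sizes such as [1, 16, 2]."""
    keys = jax.random.split(key, len(sizes) - 1)
    return [
        (jax.random.normal(k, (m, n)) / jnp.sqrt(m), jnp.zeros(n))
        for k, (m, n) in zip(keys, zip(sizes[:-1], sizes[1:]))
    ]


def mlp(params, x):
    """Feature extractor g(x): tanh hidden layers, linear output layer."""
    for W, b in params[:-1]:
        x = jnp.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b


def matern32(z1, z2, lengthscale=1.0, variance=1.0):
    """Matern-3/2 kernel on feature vectors z1: (n, F), z2: (m, F)."""
    sq = jnp.sum((z1[:, None, :] - z2[None, :, :]) ** 2, axis=-1)
    r = jnp.sqrt(sq + 1e-12)  # small epsilon keeps the gradient finite at r = 0
    s = jnp.sqrt(3.0) * r / lengthscale
    return variance * (1.0 + s) * jnp.exp(-s)


def deep_kernel(params, x1, x2):
    """Base kernel evaluated on learned features."""
    return matern32(mlp(params, x1), mlp(params, x2))
```

Because `deep_kernel` is differentiable in the MLP parameters, `jax.grad` of a marginal-likelihood objective built on it yields gradients for the network and kernel hyperparameters together.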

BIJAX: Bayesian Inference in JAX

We initially planned to write a generic piece of code to generate multiple figures related to Bayesian inference in book2. However, having realized the power of bijectors (transformation functions) in recent methods like ADVI, and the potential usefulness to a wider community, we are developing BIJAX as a full library. BIJAX supports MAP, ADVI and Laplace approximation. We are currently developing SteinVI and other methods in BIJAX.
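To illustrate why bijectors matter for ADVI: a constrained parameter (here a positive scale σ) is mapped to an unconstrained z = log σ, a Gaussian q(z) is fitted by maximizing a reparameterized Monte Carlo estimate of the ELBO, and the log-Jacobian of the transform σ = exp(z), which is simply z, is added to the log joint. Below is a minimal pure-JAX sketch, assuming a zero-mean Gaussian likelihood, an Exponential(1) prior on σ, a fixed set of noise samples and plain gradient descent. It neither uses nor reproduces BIJAX's API, and all function names are hypothetical.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm


def exp_bijector(z):
    """Map unconstrained z to sigma = exp(z) > 0; also return log|d sigma / dz| = z."""
    return jnp.exp(z), z


def log_joint(sigma, data):
    """log p(data | sigma) + log p(sigma) with x_i ~ N(0, sigma^2) and sigma ~ Exponential(1)."""
    log_lik = jnp.sum(norm.logpdf(data, loc=0.0, scale=sigma))
    log_prior = -sigma  # Exponential(1) log-density for sigma >= 0
    return log_lik + log_prior


def neg_elbo(var_params, eps, data):
    """Negative ELBO for q(z) = N(mean, exp(log_std)^2) using reparameterised samples."""
    mean, log_std = var_params
    z = mean + jnp.exp(log_std) * eps
    sigma, log_det_jac = exp_bijector(z)
    log_p = jax.vmap(lambda s: log_joint(s, data))(sigma) + log_det_jac
    entropy = log_std + 0.5 * (jnp.log(2 * jnp.pi) + 1.0)  # Gaussian entropy
    return -(jnp.mean(log_p) + entropy)


def fit_advi(data, key, steps=2000, lr=0.005, num_samples=64):
    eps = jax.random.normal(key, (num_samples,))  # fixed noise -> deterministic objective
    var_params = (jnp.array(0.0), jnp.array(-1.0))
    grad_fn = jax.jit(jax.grad(neg_elbo))
    for _ in range(steps):
        g = grad_fn(var_params, eps, data)
        var_params = jax.tree_util.tree_map(lambda p, gp: p - lr * gp, var_params, g)
    return var_params  # (mean, log_std) of q over log(sigma)
```

Without the bijector, a Gaussian q placed directly on σ would put mass on negative values where the likelihood is undefined; working in z-space and correcting with the log-Jacobian avoids that.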

Following are the figures developed with BIJAX:

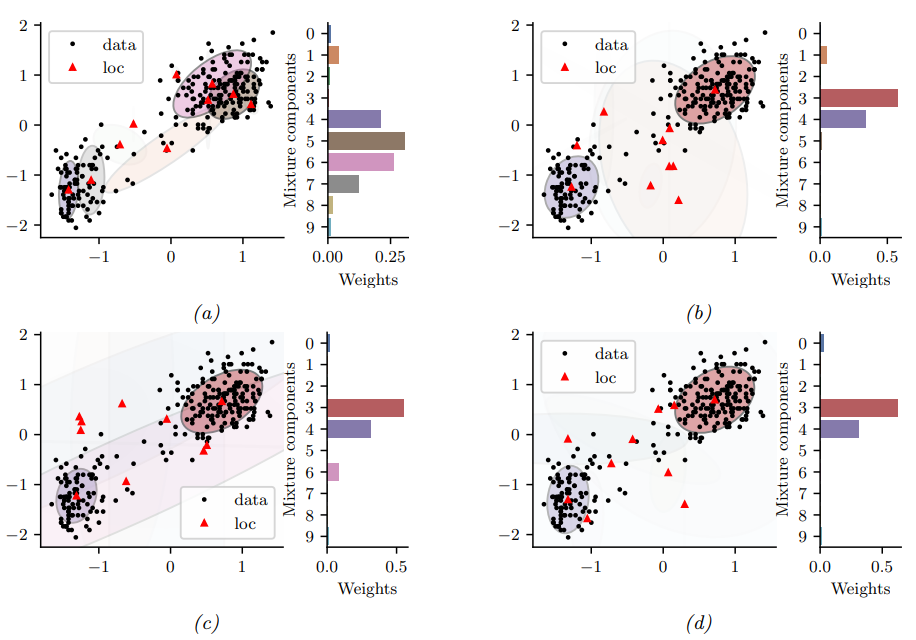

- Estimating mean of 2d Gaussian distribution with ADVI [PR 1081]

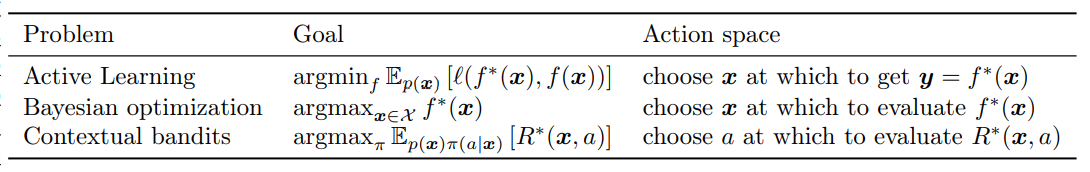

Co-authored section 34.7 - Active learning

Our lab works on active learning, and I previously published an interactive article on active learning at VizxAI. I therefore helped Dr. Murphy write the section on active learning in book2, and I mentored two non-GSoC students from our lab at IIT Gandhinagar (Nitish and Ankita), who generated all the figures in this section.

JAX: an ML library in sync with pure Python

During GSoC, I was introduced to JAX for the first time. After some exploration, I found JAX to be a quick and computationally fast library for prototyping. Tools like vmap, jit, tree_util and flatten_util were essential in the code I wrote during GSoC. I summarized the JAX tricks we learned during the GSoC period here.
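Here is a small, self-contained illustration of these four tools; the toy `predict` function and `params` dictionary are hypothetical. `vmap` batches a per-example function, `jit` compiles it, `tree_util.tree_map` applies a function to every leaf of a parameter pytree, and `flatten_util.ravel_pytree` flattens the pytree into one vector and returns a function that undoes the flattening. That last tool is handy for methods such as the Laplace approximation, which operate on a single parameter vector.

```python
import jax
import jax.numpy as jnp
from jax.flatten_util import ravel_pytree

params = {"w": jnp.ones((3, 2)), "b": jnp.zeros(2)}


def predict(params, x):
    """Per-example linear model: x has shape (3,), output has shape (2,)."""
    return x @ params["w"] + params["b"]


# vmap maps over the leading axis of x only (params are shared), then jit compiles the batch.
batched_predict = jax.jit(jax.vmap(predict, in_axes=(None, 0)))
xs = jnp.arange(12.0).reshape(4, 3)
out = batched_predict(params, xs)  # shape (4, 2)

# tree_map applies the same update to every array in the pytree.
scaled = jax.tree_util.tree_map(lambda p: 0.5 * p, params)

# ravel_pytree: pytree -> flat vector, plus a function mapping it back.
flat, unravel = ravel_pytree(params)  # 3 * 2 + 2 = 8 entries
restored = unravel(flat)
```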

Summary of PRs

The following table lists the PRs I created during the GSoC period, sorted by time.

| PR | Title |
|---|---|
| PR 690 | Update beta_binom_post_plot.py |
| PR 703 | Add workflow and jupyter-book setup |
| PR 704 | Added discrete_prob_dist_plot.ipynb |
| PR 707 | Do not auto generate scripts |
| PR 709 | Rename environment variables |
| PR 719 | Remove jupyter book |
| PR 721 | add combining_kernels_by_multiplication notebook |
| PR 722 | add combining_kernels_by_summation notebook |
| PR 727 | Fig18.2 (Book2) add gprDemoArd.ipynb |
| PR 728 | Fig18.7 (Book2) add gprDemoNoiseFreeAndNoisy.ipynb |
| PR 741 | converted py files to notebooks. |
| PR 750 | Update gprDemoNoiseFreeAndNoisy |
| PR 763 | Mention Python version in README |
| PR 764 | Split the workflow. Enable static module checker. |
| PR 779 | pin Python 3.7.13 in workflow |
| PR 780 | Copied d2l notebooks to pyprobml |
| PR 787 | Move non-figure notebooks to pyprobml |
| PR 795 | Combine and publish results from parallel workflows |
| PR 798 | Updated README.md and CONTRIBUTING.md |
| PR 800 | Copy probml-notebooks/notebooks to notebooks/misc |
| PR 805 | Push updated notebooks to misc |
| PR 824 | Add book2 notebooks |
| PR 834 | GitHub to colab |
| PR 846 | Add autogenerated contributors’ list |
| PR 871 | Refactor deep kernel learning figure |
| PR 874 | Fix workflow and latexify spectral mixture gp figures |
| PR 919 | Latexified and blackjaxified figure 17.18 gp_kernel_opt.ipynb |
| PR 1046 | AL notebooks added |
| PR 1081 | added gaussian 2d ADVI |
| PR 1084 | Added GMM ADVI notebook |
| PR 1099 | Latexify vib_demo |

Summary

During this GSoC, I worked on a large project that is useful to the academic community around the world, with a wonderful mentor, Dr. Kevin Murphy. At the same time, I learned JAX, which is now my default ML library, and gained confidence in my ability to work on such large projects. I will always be grateful to Dr. Kevin Murphy for this opportunity, which added a unique and valuable experience to my PhD journey.